`torch` is definitely installed; otherwise, other operations with `torch` wouldn't work either. But I get the following error: `AttributeError: module 'torch' has no attribute 'permute'`.
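This error most likely means that the installed PyTorch release has no module-level `torch.permute` function, which older releases may not expose. The method form `Tensor.permute` has long been part of the tensor API, so calling it on the tensor usually avoids the error. Upgrading PyTorch is the other option. A minimal sketch, assuming a tensor shaped like a BERT-style `(batch, seq_len, hidden)` output (the sizes are made up for illustration):

```python
import torch

# Hypothetical stand-in for a BERT-style output: (batch, seq_len, hidden).
encoded_layers = torch.randn(2, 5, 8)

# Method form: available on every Tensor, regardless of release.
seq_first = encoded_layers.permute(1, 0, 2)  # -> (seq_len, batch, hidden)

# Function form: only call it if this PyTorch build actually provides it.
if hasattr(torch, "permute"):
    assert torch.equal(seq_first, torch.permute(encoded_layers, (1, 0, 2)))

print(seq_first.shape)  # torch.Size([5, 2, 8])
```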

# Permute PyTorch code

So, I removed output_all_encoded_layers=False fromĮncoded_layers, pooled_output = self.bert(input_ids=sents_tensor, attention_mask=masks_tensor, output_all_encoded_layers=False). I tried to run the code below for training a sequence tagging model (didn’t list all of the code because it works fine). TypeError: forward() got an unexpected keyword argument 'output_all_encoded_layers' When I tried to use the same forward function to train the XLM-R - LSTM model, I got the following error It plans to implement swapaxes as an alternative transposition mechanism, so swapaxes and permute would work on both PyTorch tensors and NumPy-like arrays (and make PyTorch tensors more NumPy-like). Pre_softmax = self.hidden_to_softmax(output_hidden) PyTorch uses transpose for transpositions and permute for permutations.

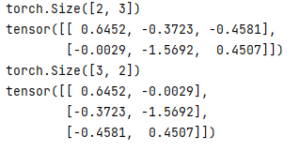

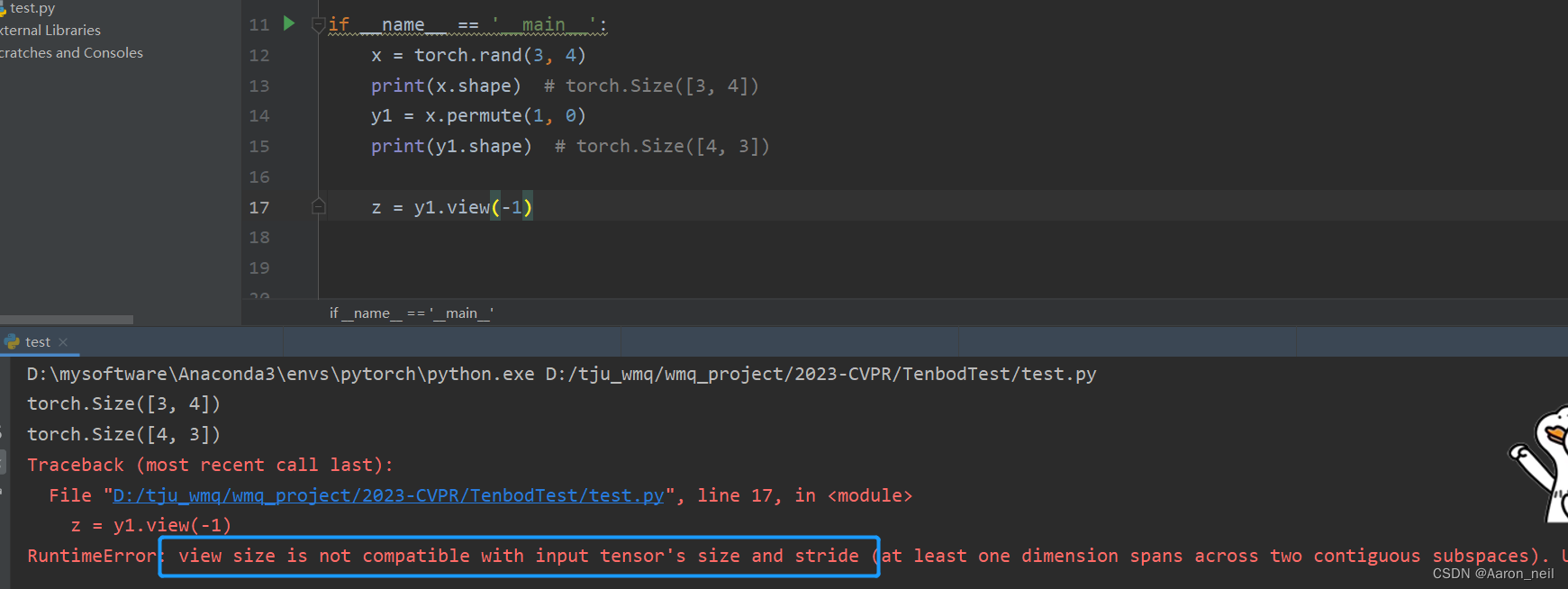

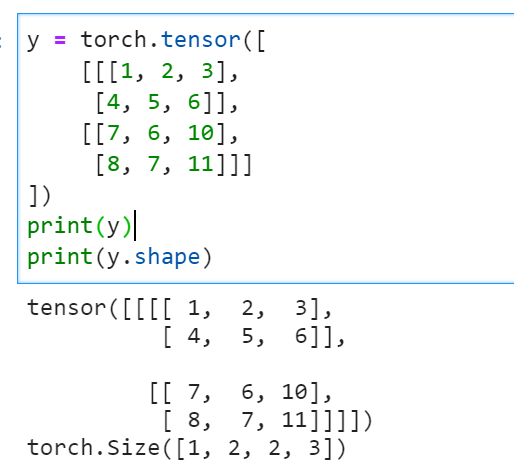

Output_hidden = self.dropout(output_hidden) Negative dim will correspond to unsqueeze () applied at dim dim + input.dim () + 1. A dim value within the range -input.dim () - 1, input.dim () + 1) can be used. Here, I would like to talk about view () vs reshape (), transpose () vs permute ().

Some of these methods may be confusing for new users. The returned tensor shares the same underlying data with this tensor. machine-learning 784 words 4 mins read times read PyTorch provides a lot of methods for the Tensor type. Output_hidden = torch.cat((last_hidden, last_hidden), dim=1) Returns a new tensor with a dimension of size one inserted at the specified position. Sents_tensor, masks_tensor, sents_lengths = sents_to_tensor(self.tokenizer, sents, vice)Įncoded_layers, pooled_output = self.bert(input_ids=sents_tensor, attention_mask=masks_tensor, output_all_encoded_layers=False)Įncoded_layers = encoded_layers.permute(1, 0, 2)Įnc_hiddens, (last_hidden, last_cell) = self.lstm(pack_padded_sequence(encoded_layers, sents_lengths)) The model was implemented with PyTorch and all experiments were run on an NVidia. The forward function of the BERT-LSTM is as follows. Permute Me Softly: Learning Soft Permutations for Graph Representations. The complete code of BERT-LSTM worked fine without any bugs. PyTorch torch.permute () rearranges the original tensor according to the desired ordering and returns a new multidimensional rotated tensor. Remove this cell containing instructions before making a submission or sharing your notebook, to make it more presentable.I’m trying to convert a BERT-LSTM model to XLM-R - LSTM model. IMPORTANT NOTE: Make sure to submit a Jovian notebook link e.g. "All about PyTorch tensor operations", "5 PyTorch functions you didn't know you needed", "A beginner's guide to Autograd in PyToch", "Interesting ways to create PyTorch tensors", "Trigonometic functions in PyTorch", "How to use PyTorch tensors for Linear Algebra" etc. Try to give your notebook an interesting title e.g. The recommended way to run this notebook is to click the "Run" button at the top of this page, and select "Run on Colab". Artificial Intelligence & ML Statistical modeling Advanced Visualizations Big Data Supporting. 
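A short sketch of `transpose()` vs `permute()`, `view()` vs `reshape()`, and negative `dim` in `unsqueeze()`, on a small made-up tensor. It assumes a PyTorch release recent enough to include `torch.swapaxes`, which now exists as an alias of `torch.transpose`:

```python
import torch

x = torch.arange(24).reshape(2, 3, 4)  # contiguous, shape (2, 3, 4)

# transpose() swaps exactly two dimensions; permute() takes a full ordering.
t = x.transpose(0, 1)   # shape (3, 2, 4)
p = x.permute(1, 0, 2)  # the same reordering written as a permutation
assert torch.equal(t, p)
assert torch.equal(torch.swapaxes(x, 0, 1), t)  # NumPy-style alias

# Both return views sharing x's storage, with reordered strides.
assert t.data_ptr() == x.data_ptr()
assert not t.is_contiguous()

# view() needs compatible strides and fails here; reshape() copies when it must.
try:
    t.view(6, 4)
except RuntimeError:
    print("view() failed on the non-contiguous tensor")
r = t.reshape(6, 4)  # succeeds, returning a copy in this case

# unsqueeze(): a negative dim maps to dim + input.dim() + 1.
u_neg = x.unsqueeze(-1)  # -1 + 3 + 1 = 3
u_pos = x.unsqueeze(3)
assert u_neg.shape == u_pos.shape == (2, 3, 4, 1)
```

The rule of thumb this illustrates: `view()` never copies and therefore refuses incompatible strides, while `reshape()` returns a view when possible and a copy otherwise.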
# permute and tensor.view in PyTorch

I am trying to follow the PyTorch code for the SSD implementation (GitHub link). Inside `ssd.py`, at this line, there is a call to `contiguous()` after `permute()`.

`torch.bmm(input, mat2, *, out=None) → Tensor`

Performs a batch matrix-matrix product of the matrices stored in `input` and `mat2`. `input` and `mat2` must be 3-D tensors, each containing the same number of matrices. If `input` is a (b × n × m) tensor and `mat2` is a (b × m × p) tensor, `out` will be a (b × n × p) tensor.
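Why `contiguous()` tends to follow `permute()`: `permute()` returns a view with reordered strides, and `view()` can only merge dimensions whose strides are compatible, so the data is first copied into contiguous memory. The sketch below uses made-up shapes in an assumed `(N, C, H, W)` layout, not the actual tensors in `ssd.py`, and also checks the `torch.bmm` shape rule using a permuted operand:

```python
import torch

# Hypothetical head output in (N, C, H, W) layout; sizes are illustrative only.
N, C, H, W = 2, 6, 3, 3
conf = torch.randn(N, C, H, W)

# Move channels last so each location's C values are adjacent after flattening.
conf_nhwc = conf.permute(0, 2, 3, 1)  # (N, H, W, C), a non-contiguous view
assert not conf_nhwc.is_contiguous()

# view() cannot flatten these strides, so copy into contiguous memory first.
flat = conf_nhwc.contiguous().view(N, -1)  # (N, H * W * C)

# torch.bmm: (b, n, m) @ (b, m, p) -> (b, n, p).
# permute(0, 2, 1) supplies each matrix's transpose as the second operand.
a = torch.randn(4, 5, 3)
gram = torch.bmm(a, a.permute(0, 2, 1))  # (4, 5, 5)
```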

In Keras (Keras Core: Keras for TensorFlow, JAX, and PyTorch), the `Permute` layer permutes the dimensions of the input according to a given pattern.
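The Keras pattern is written differently from `Tensor.permute`: per the Keras `Permute` documentation, it does not include the batch dimension and its indexing starts at 1, so `Permute((2, 1))` turns a `(batch, 10, 64)` input into `(batch, 64, 10)`. A minimal sketch of the equivalent PyTorch call; `keras_pattern_to_torch` is a hypothetical helper, not part of either library:

```python
import torch


def keras_pattern_to_torch(dims):
    """Hypothetical helper: turn a Keras-style Permute pattern into a torch order.

    Keras patterns skip the batch axis and count from 1, which already matches
    torch's 0-indexed positions of the non-batch axes, so only the batch axis (0)
    needs to be prepended.
    """
    if sorted(dims) != list(range(1, len(dims) + 1)):
        raise ValueError(f"not a valid permutation pattern: {dims}")
    return (0, *dims)


x = torch.randn(32, 10, 64)              # (batch, 10, 64)
order = keras_pattern_to_torch((2, 1))   # (0, 2, 1)
y = x.permute(*order)
print(y.shape)  # torch.Size([32, 64, 10])
```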
